About

METR (pronounced ‘meter’) is a nonprofit that evaluates frontier AI models to help companies and wider society understand AI capabilities and what risks they pose.

Most of METR’s research consists of evaluations assessing the extent to which an AI system can autonomously carry out substantial tasks, including general-purpose tasks like conducting research or developing an app, and concerning capabilities such as conducting cyberattacks or making itself hard to shut down. Recently, we’ve begun studying the effects of AI on real-world software developer productivity, as well as potential AI behavior that threatens the integrity of evaluations, along with mitigations for such behavior.

Technical challenge

A challenge for the 3blue1brown audience

Featured work

Uplift study

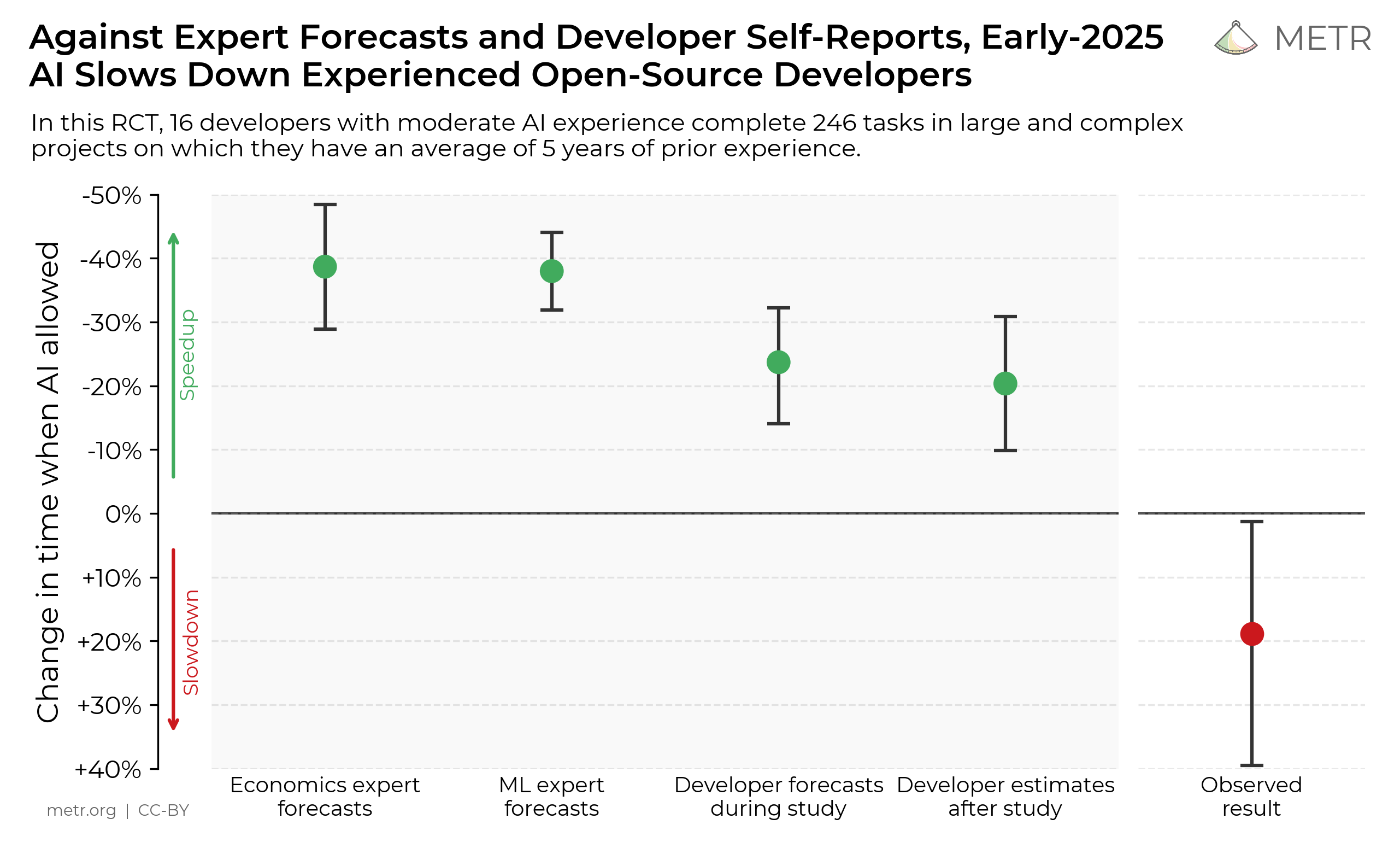

We conducted a randomized controlled trial (RCT) measuring how early-2025 AI tools affected the productivity of 16 experienced open-source developers working on large, mature codebases (averaging 5 years of experience with the repository). Developers completed 246 real issues, which were randomly assigned to either allow or disallow AI usage. Surprisingly, we found that when developers used AI tools, they took 19% longer: AI slowed them down. This contrasted sharply with both the developers' own expectations and expert forecasts (24% and 38-39% shorter time to complete tasks when allowed to use AI, respectively).

Read the full result

Time horizon study

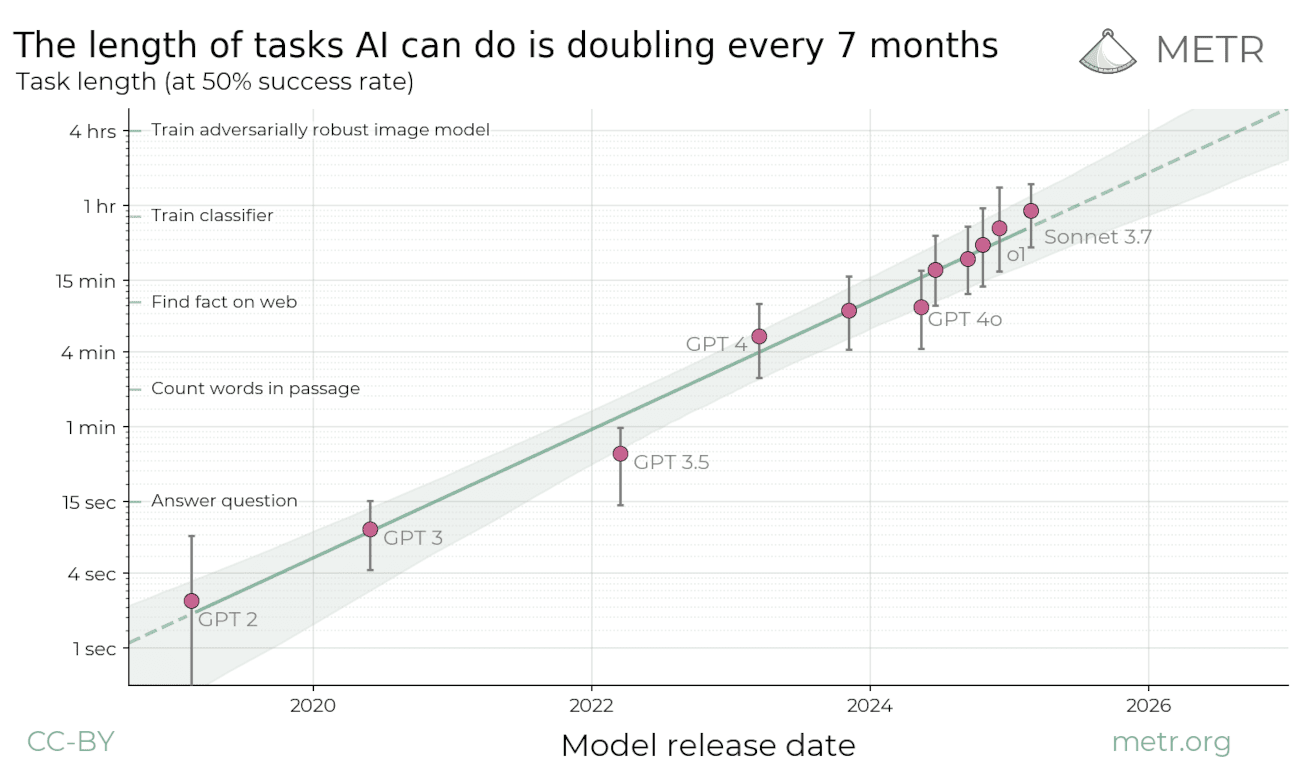

We propose measuring AI performance in terms of the length of tasks AI agents can complete. We show that this metric has increased exponentially and consistently over the past 6 years, with a doubling time of around 7 months. Extrapolating this trend predicts that, in under a decade, we will see AI agents that can independently complete a large fraction of software tasks that currently take humans days or weeks.
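
To make the doubling-time arithmetic concrete, here is a minimal sketch that assumes the trend is a clean exponential with a fixed 7-month doubling time. The function names and the starting and target task lengths in the usage note are illustrative assumptions, not figures or code from the study.

```python
import math


def growth_factor(months: float, doubling_months: float = 7.0) -> float:
    """Multiplicative growth over `months` for a quantity that doubles
    every `doubling_months` (assumes a fixed, idealized doubling time)."""
    return 2.0 ** (months / doubling_months)


def months_to_reach(start_hours: float, target_hours: float,
                    doubling_months: float = 7.0) -> float:
    """Months needed for a task-length horizon to grow from `start_hours`
    to `target_hours` under the same idealized exponential trend."""
    return doubling_months * math.log2(target_hours / start_hours)


if __name__ == "__main__":
    # Growth implied by 6 years (72 months) of doubling every 7 months.
    print(f"6-year growth factor: {growth_factor(72):.0f}x")

    # Hypothetical inputs: a 1-hour horizon growing to a 40-hour work week.
    print(f"Months from 1h to 40h: {months_to_reach(1, 40):.1f}")
```

Under these assumptions, six years of 7-month doublings corresponds to growth of roughly three orders of magnitude, and a hypothetical 1-hour horizon would reach a 40-hour task in a little over three years. The real trend carries uncertainty that this sketch ignores.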

Read the full result