Chapter 14: Taylor series

"To many, mathematics is a collection of theorems. For me, mathematics is a collection of examples; a theorem is a statement about a collection of examples and the purpose of proving theorems is to classify and explain the examples."

- John B. Conway

Introduction

When I first learned about Taylor series, I definitely didn't appreciate how important they are. But time and time again they come up in math, physics, and many fields of engineering because they're one of the most powerful tools that math has to offer for approximating functions.

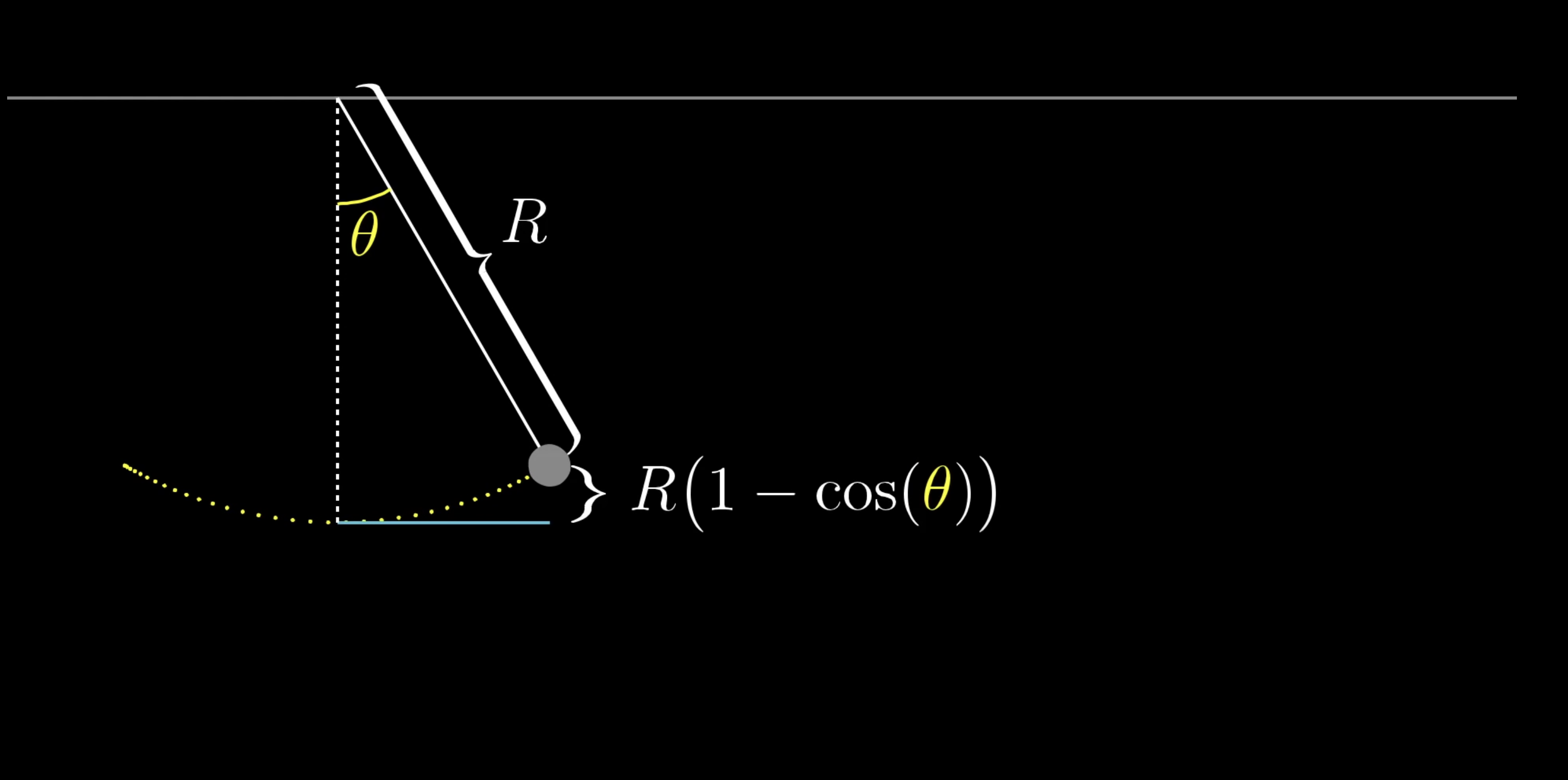

One of the first times this clicked for me as a student was not in a calculus class, but in a physics class. We were studying some problem that had to do with the potential energy of a pendulum, and for that you need an expression for how high the weight of the pendulum is above its lowest point, which works out to be proportional to one minus the cosine of the angle between the pendulum and the vertical.

The specifics of the problem we were trying to solve are beside the point here, but I'll just say that this cosine function made the problem awkward and unwieldy. But by approximating $\cos(\theta)$ as $1 - \frac{\theta^2}{2}$, of all things, everything fell into place much more easily. If you've never seen anything like this before, an approximation like that might seem completely out of left field.

If you graph $1 - \frac{\theta^2}{2}$ along with the function $\cos(\theta)$, they do seem rather close to each other for small angles near $0$. But how would you even think to make this approximation? And how would you find this particular quadratic?

The study of Taylor series is largely about taking non-polynomial functions and finding polynomials that approximate them near some input. The motive is that polynomials tend to be much easier to deal with than other functions: they're easier to compute, easier to differentiate, easier to integrate... they're just all around friendly.

Approximating $\cos(x)$

Let's look at the function $\cos(x)$, and take a moment to think about how you might find a quadratic approximation near $x = 0$. That is, among all the polynomials that look like $c_0 + c_1 x + c_2 x^2$ for some choice of the constants $c_0$, $c_1$, and $c_2$, find the one that most resembles $\cos(x)$ near $x = 0$.

First of all, at the input $0$ the value of $\cos(x)$ is $1$, so if our approximation is going to be any good at all, it should also equal $1$ when you plug in $x = 0$.

Plugging in $x = 0$ just results in whatever $c_0$ is, so we can set that equal to $1$.

This leaves us free to choose the constants $c_1$ and $c_2$ to make this approximation as good as we can, but nothing we do to them will change the fact that the output of our approximation at $x = 0$ is equal to $1$.

Different choices for $c_1$ and $c_2$ now that $c_0$ is locked in place.

It would also be good if our approximation had the same tangent slope as $\cos(x)$ at $x = 0$. Otherwise, the approximation drifts away from the cosine graph faster than it needs to as $x$ moves away from $0$. The derivative of $\cos(x)$ is $-\sin(x)$, and at $x = 0$ that equals $0$, meaning its tangent line is flat.

In other words, we want the derivative of our approximation to match the derivative of our original function at $x = 0$. The derivative of our quadratic is $c_1 + 2c_2 x$, which at $x = 0$ is just $c_1$.

Setting $c_1$ equal to $0$ ensures that our approximation matches the tangent slope of $\cos(x)$ at this point.

At this point our approximation has the form $1 + c_2 x^2$. We should feel confident that the process is working, because our approximation now matches both the value and the slope of $\cos(x)$ at $x = 0$, leaving us free to change $c_2$.

Different choices for $c_2$ now that $c_0$ and $c_1$ are locked in place.

We can take advantage of the fact that the cosine graph curves downward above $x = 0$; it has a negative second derivative. In other words, even though the rate of change is $0$ at that point, the rate of change itself is decreasing around that point.

Specifically, since its derivative is $-\sin(x)$, its second derivative is $-\cos(x)$, so at $x = 0$ its second derivative is $-1$.

Just as we wanted the derivative of our approximation to match that of cosine, we'll also make sure that their second derivatives match, so that they curve at the same rate. The slope of our polynomial shouldn't drift away from the slope of $\cos(x)$ any more quickly than it needs to.

We can compute that at $x = 0$, the second derivative of our polynomial is $2c_2$. In fact, that's its second derivative everywhere; it is a constant. To make sure this second derivative matches that of $\cos(x)$, we want it to equal $-1$, which means $c_2 = -\frac{1}{2}$. This locks in a final value for our approximation:

$$\cos(x) \approx 1 - \frac{1}{2}x^2$$

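To get a feel for how good this is, let's try it out for $x = 0.1$: the approximation gives $1 - \frac{1}{2}(0.1)^2 = 0.995$, while the true value is $\cos(0.1) \approx 0.9950042$.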

That's pretty good!
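A quick numerical check of that comparison, written as a minimal Python sketch. It assumes angles are measured in radians (which is what `math.cos` expects), and the helper name `cos_quadratic` is our own, not part of the original lesson.

```python
import math

def cos_quadratic(x):
    """Quadratic approximation 1 - x^2/2 of cos(x) near x = 0 (x in radians)."""
    return 1 - x**2 / 2

x = 0.1
approx = cos_quadratic(x)
exact = math.cos(x)
print(f"approximation: {approx:.7f}")
print(f"cos({x}):      {exact:.7f}")
print(f"error:         {abs(exact - approx):.2e}")
```

The printed error is on the order of $10^{-6}$, which is why the quadratic is such a convenient stand-in for small angles.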

Take a moment to reflect on what just happened. You had three degrees of freedom with a quadratic approximation: the coefficients in the expression $c_0 + c_1 x + c_2 x^2$.

- $c_0$ was responsible for making sure that the output of the approximation matches that of $\cos(x)$ at $x = 0$.

- $c_1$ was in charge of making sure the derivatives match at that point.

- $c_2$ was responsible for making sure the second derivatives match up.

This ensures that the way your approximation changes as you move away from $x = 0$, and the way that the rate of change itself changes, is as similar as possible to the behavior of $\cos(x)$, given the amount of control you have.

Better Approximations

You could give yourself more control by allowing more terms in your polynomial and matching higher-order derivatives of $\cos(x)$. For example, let's say we add on the term $c_3 x^3$ for some constant $c_3$.

If you take the third derivative of a cubic polynomial, anything quadratic or smaller goes to $0$. As for that last term, after three iterations of the power rule it looks like $1 \cdot 2 \cdot 3 \cdot c_3$.

On the other hand, the third derivative of $\cos(x)$ is $\sin(x)$, which equals $0$ at $x = 0$, so to make the third derivatives match, the constant $c_3$ should be $0$.

In other words, not only is $1 - \frac{1}{2}x^2$ the best possible quadratic approximation of $\cos(x)$ around $x = 0$, it's also the best possible cubic approximation.

You can actually make an improvement by adding a fourth-order term, $c_4 x^4$. The fourth derivative of $\cos(x)$ is $\cos(x)$ itself, which equals $1$ at $x = 0$. And what's the fourth derivative of our polynomial with this new term?

When you keep applying the power rule over and over, with those exponents all hopping down to the front, you end up with $1 \cdot 2 \cdot 3 \cdot 4 \cdot c_4$, which is $24 c_4$.

So if we want this to match the fourth derivative of $\cos(x)$, which at $x = 0$ is $1$, then we must set $c_4 = \frac{1}{24}$.

This polynomial, $1 - \frac{1}{2}x^2 + \frac{1}{24}x^4$, is a very close approximation of $\cos(x)$ around $x = 0$. In any physics problem involving the cosine of some small angle, for example, predictions would be almost unnoticeably different if you substituted this polynomial for $\cos(x)$.
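To see how much the fourth-order term buys, here is a small Python sketch comparing both polynomials against `math.cos` at a few angles in radians. The function names and the sample angles are illustrative choices, not taken from the lesson.

```python
import math

def cos_taylor2(x):
    # Matches the value, slope, and second derivative of cos at 0.
    return 1 - x**2 / 2

def cos_taylor4(x):
    # Also matches the third (0) and fourth (1) derivatives of cos at 0.
    return 1 - x**2 / 2 + x**4 / 24

for angle in (0.1, 0.5, 1.0):
    exact = math.cos(angle)
    err2 = abs(exact - cos_taylor2(angle))
    err4 = abs(exact - cos_taylor4(angle))
    print(f"x = {angle}: quadratic error {err2:.1e}, quartic error {err4:.1e}")
```

At every sample angle the quartic's error comes out smaller than the quadratic's.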

Generalizing

It's worth stepping back to notice a few things about this process. First, factorial terms come up naturally. When you take $n$ derivatives of $x^n$, letting the power rule keep cascading, what you're left with is $1 \cdot 2 \cdot 3 \cdots n = n!$.

So you don't simply set the coefficients of the polynomial equal to whatever derivative value you want; you have to divide by the appropriate factorial to cancel out this effect. For example, in the approximation for $\cos(x)$, the $x^4$ coefficient is the fourth derivative of cosine at $0$, which is $1$, divided by $4! = 24$.

The second thing to notice is that adding new terms, like this $c_4 x^4$, doesn't mess up what the old terms should be, and that's important. For example, the second derivative of this polynomial at $x = 0$ is still equal to $2$ times the coefficient $c_2$, even after introducing higher-order terms to the polynomial.

This is because we're plugging in $x = 0$, so the second derivative of any higher-order term, which still includes a factor of $x$, will wash away. The same goes for any other derivative, which is why each derivative of a polynomial at $x = 0$ is controlled by one and only one coefficient.

$c_0$ controls the value at $x = 0$, $c_1$ controls the first derivative there, $c_2$ controls the second derivative, and so on.
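The bookkeeping above can be checked mechanically. This sketch rests on our own assumptions: a polynomial is stored as a Python list of coefficients `[c0, c1, c2, ...]`, and the helpers `derivative` and `nth_derivative_at_zero` are hypothetical names. It confirms that the $k$-th derivative at $0$ equals $k! \cdot c_k$ whatever the other coefficients are, then runs the reverse step of dividing target derivatives by $k!$.

```python
from math import factorial

def derivative(coeffs):
    """Differentiate a polynomial stored as [c0, c1, c2, ...], where c_n multiplies x**n."""
    # Power rule: c_n * x**n -> n * c_n * x**(n - 1); the constant term drops out.
    return [n * c for n, c in enumerate(coeffs)][1:]

def nth_derivative_at_zero(coeffs, k):
    """k-th derivative evaluated at x = 0: only the resulting constant term survives."""
    for _ in range(k):
        coeffs = derivative(coeffs)
    return coeffs[0] if coeffs else 0

# Hypothetical coefficients c0..c4, chosen only for illustration.
c = [5, -3, 7, 2, 11]
for k in range(len(c)):
    # Each k-th derivative at 0 equals k! * c_k; the other coefficients never interfere.
    print(k, nth_derivative_at_zero(c, k), factorial(k) * c[k])

# Reverse direction: to hit target derivatives d_k at 0, set c_k = d_k / k!.
cos_derivs_at_zero = [1, 0, -1, 0, 1]  # cos, -sin, -cos, sin, cos evaluated at 0
cos_coeffs = [d / factorial(k) for k, d in enumerate(cos_derivs_at_zero)]
print(cos_coeffs)
```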

Comprehension Question

Just like $\cos(x)$, the function $\sin(x)$ is a situation where we know its derivative and its value at $x = 0$. Let's apply the same process to find the third-degree Taylor polynomial of $\sin(x)$.

Using what we know about the derivatives of $\sin(x)$, what are the best possible choices for the coefficients $c_0$, $c_1$, $c_2$, and $c_3$ to approximate the function as a third-degree polynomial around the point $x = 0$?

Approximating around other points

If instead you were approximating near an input other than $0$, like $x = \pi$, to get the same effect you would have to write your polynomial in terms of powers of $(x - \pi)$. Or more generally, powers of $(x - a)$ for some constant $a$.

This makes it look notably more complicated, but it's all to make sure that plugging in $x = a$ results in a lot of nice cancellation, so that the value of each higher-order derivative is controlled by one and only one coefficient.
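As a worked illustration (the center $a = \pi$ is our own choice of example), here is the cosine approximation written in powers of $(x - \pi)$. The derivatives of $\cos(x)$ evaluated at $\pi$ are $\cos(\pi) = -1$, $-\sin(\pi) = 0$, $-\cos(\pi) = 1$, $\sin(\pi) = 0$, and then $\cos(\pi) = -1$ again, so dividing each by the matching factorial gives:

$$
\cos(x) \approx -1 + \frac{1}{2!}(x - \pi)^2 - \frac{1}{4!}(x - \pi)^4
$$

Differentiating a term $c_n (x - \pi)^n$ twice leaves a factor of $(x - \pi)^{n-2}$, which is zero at $x = \pi$ whenever $n > 2$; that is the cancellation that lets $c_2$ alone control the second derivative there. As a sanity check, $\cos(\pi + h) = -\cos(h)$, so this is just the earlier approximation $1 - \frac{1}{2}h^2 + \frac{1}{24}h^4$ flipped upside down and recentered at $\pi$.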

Finally, on a more philosophical level, notice how we're taking information about the higher-order derivatives of a function at a single point, and translating it into information (or at least approximate information) about the value of that function near that point. This is the major takeaway for Taylor series: Differential information about a function at one value tells you something about an entire neighborhood around that value.

We can take as many derivatives of $\cos(x)$ as we want; they follow a nice cyclic pattern: $\cos(x)$, $-\sin(x)$, $-\cos(x)$, $\sin(x)$, and repeat.

So the values of these derivatives at $x = 0$ have the cyclic pattern $1$, $0$, $-1$, $0$, and repeat. And knowing the values of all those higher-order derivatives is a lot of information about $\cos(x)$, even though it only involved plugging in a single input, $x = 0$.

That information is leveraged to get an approximation around this input by creating a polynomial whose higher-order derivatives match up with those of $\cos(x)$, following this same $1$, $0$, $-1$, $0$ cyclic pattern.

To do that, make each coefficient of this polynomial follow this same pattern, but divide each one by the appropriate factorial, which cancels out the cascading effects of many power rule applications:

$$\cos(x) \approx 1 - \frac{x^2}{2!} + \frac{x^4}{4!} - \frac{x^6}{6!} + \cdots$$

The polynomials you get by stopping this process at any point are called "Taylor polynomials" for $\cos(x)$.
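That recipe can be sketched in a few lines of Python, assuming angles in radians; `cos_taylor` is a hypothetical name. It walks the $1, 0, -1, 0$ cycle of derivative values at $0$ and divides each by $n!$.

```python
import math

def cos_taylor(x, degree):
    """Taylor polynomial of cos around 0, keeping terms up to x**degree."""
    cycle = [1, 0, -1, 0]  # values of cos, -sin, -cos, sin at x = 0
    total = 0.0
    for n in range(degree + 1):
        # Coefficient: n-th derivative at 0 divided by n!
        total += cycle[n % 4] / math.factorial(n) * x**n
    return total

for degree in (2, 4, 6, 8):
    print(degree, cos_taylor(1.0, degree), math.cos(1.0))
```

Evaluated at $x = 1$, the printed values move closer to `math.cos(1.0)` with each increase in degree.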

Other Functions

More generally, if we were dealing with some function other than cosine, you would compute its derivative, second derivative, and so on, getting as many terms as you'd like, and you'd evaluate each one at $x = 0$.

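Then for your polynomial approximation, the coefficient of each $x^n$ term should be the value of the $n$-th derivative of the function at $0$, divided by $n!$:

$$f(0) + \frac{f'(0)}{1!}x + \frac{f''(0)}{2!}x^2 + \frac{f'''(0)}{3!}x^3 + \cdots$$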

When you see this, think to yourself that the constant term ensures that the value of the polynomial matches that of $f$ at $x = 0$, the next term ensures that the slope of the polynomial matches that of the function, the next term ensures the rate at which that slope changes is the same, and so on, depending on how many terms you want.

The more terms you choose, the closer the approximation, but the tradeoff is that your polynomial is more complicated. And if you want to approximate near some input other than $0$, you write the polynomial in terms of $(x - a)$ instead, and evaluate all the derivatives of $f$ at that input $a$.

This is what Taylor series look like in their fullest generality:

$$P(x) = f(a) + \frac{f'(a)}{1!}(x - a) + \frac{f''(a)}{2!}(x - a)^2 + \frac{f'''(a)}{3!}(x - a)^3 + \cdots$$

Changing the value of $a$ changes where the approximation is hugging the original function, that is, where its higher-order derivatives equal those of the original function.
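The general formula translates almost line for line into code. In this sketch (the names are our own), the caller supplies the list of derivative values $f(a), f'(a), f''(a), \ldots$, since computing derivatives is a separate problem. As an illustrative choice, the example uses $f(x) = e^x$ centered at $a = 1$, where every derivative equals $e$.

```python
import math

def taylor_poly(derivs_at_a, a, x):
    """Evaluate the sum over n of f^(n)(a) / n! * (x - a)**n.

    derivs_at_a lists [f(a), f'(a), f''(a), ...]; its length sets the degree.
    """
    return sum(d / math.factorial(n) * (x - a) ** n
               for n, d in enumerate(derivs_at_a))

# Illustration: f(x) = e^x around a = 1, where every derivative equals e.
a = 1.0
derivs = [math.e] * 15  # degree-14 Taylor polynomial
print(taylor_poly(derivs, a, 1.5), math.exp(1.5))
```

Moving `a` moves the point where the polynomial's derivatives agree with those of $f$; the list of derivative values must then be recomputed at the new center.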

A meaningful example

One of the simplest meaningful examples is to approximate the function $e^x$ around the input $x = 0$. Computing its derivatives is nice, since the derivative of $e^x$ is also $e^x$, so its second derivative is also $e^x$, as is its third, and so on.

So at the point $x = 0$, all of the derivatives are equal to $1$. This means our polynomial approximation looks like $1 + x + \frac{1}{2!}x^2 + \frac{1}{3!}x^3 + \frac{1}{4!}x^4 + \cdots$, with as many terms as you want. These are the Taylor polynomials for $e^x$.

Infinity and convergence

We could stop here, and you'd have a phenomenally useful tool for approximations with these Taylor polynomials. But if you're thinking like a mathematician, one question you might ask is whether it makes sense to never stop, and add up infinitely many terms.

In math, an infinite sum is called a "series", so while each approximation with finitely many terms is called a "Taylor polynomial" for your function, adding all infinitely many terms gives what's called a "Taylor series".

You have to be careful with the idea of an infinite sum because one can never truly add infinitely many things; you can only hit the plus button on the calculator so many times. The more precise way to think about a series is to ask what happens as you add more and more terms. If the partial sums you get by adding more terms one at a time approach some specific value, you say the series converges to that value.

It's a mouthful to always say "The partial sums of the series converge to such and such value", so instead mathematicians often think about it more compactly by extending the definition of equality to include this kind of series convergence. That is, you'd say this infinite sum equals the value its partial sums converge to.

For example, look at the Taylor polynomials for $e^x$, and plug in some input like $x = 1$.

As you add more and more polynomial terms, the total sum gets closer and closer to the value $e$:

$$e = 1 + 1 + \frac{1}{2!} + \frac{1}{3!} + \frac{1}{4!} + \cdots$$

The precise-but-verbose way to say this is "the partial sums of the series on the right converge to $e$." More briefly, most people would abbreviate this by simply saying "the series equals $e$."
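Here is a minimal Python sketch of those partial sums at $x = 1$. Rather than recomputing each factorial, it builds each term $x^n/n!$ from the previous one by multiplying by $x/(n+1)$; that update and the name `exp_partial_sums` are our own choices.

```python
import math

def exp_partial_sums(x, n_terms):
    """Partial sums of 1 + x + x^2/2! + ..., using 1, 2, ..., n_terms terms."""
    sums = []
    total = 0.0
    term = 1.0  # x^0 / 0!
    for n in range(n_terms):
        total += term
        sums.append(total)
        term *= x / (n + 1)  # turn x^n/n! into x^(n+1)/(n+1)!
    return sums

for k, s in enumerate(exp_partial_sums(1.0, 12), start=1):
    print(f"{k:2d} terms: {s:.8f}  (e = {math.e:.8f})")
```

The printed values climb toward $e$, and the gap shrinks rapidly because each new term is divided by a larger factorial.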

In fact, it turns out that if you plug in any other value of $x$, like $x = 2$, and look at the value of higher and higher order Taylor polynomials at this value, they will converge towards $e^x$, in this case $e^2$.

This is true for any input, no matter how far away from $0$ it is, even though these Taylor polynomials are constructed only from derivative information gathered at the input $0$. In a case like this, we say $e^x$ equals its Taylor series at all inputs $x$. This is a somewhat magical fact! It means all of the information about the function is somehow captured purely by its higher-order derivatives at a single input, namely $0$.
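To make "no matter how far away" concrete, this sketch counts how many terms the $e^x$ partial sums need before landing within a relative tolerance of `math.exp(x)`. The tolerance, the `max_terms` cap, and the name `terms_needed` are arbitrary choices for illustration. It sticks to non-negative inputs, because for large negative $x$ the naive sum loses accuracy to floating-point cancellation, a limitation of the arithmetic rather than of the series.

```python
import math

def terms_needed(x, rel_tol=1e-12, max_terms=500):
    """Fewest Taylor terms whose partial sum is within rel_tol of e^x (None if never)."""
    target = math.exp(x)
    total, term = 0.0, 1.0
    for n in range(max_terms):
        total += term
        if abs(total - target) <= rel_tol * target:
            return n + 1
        term *= x / (n + 1)  # next term x^(n+1)/(n+1)!
    return None  # did not get close enough within max_terms

for x in (1, 5, 10, 20):
    print(x, terms_needed(x))
```

Inputs farther from $0$ need more terms, but the count stays finite.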

Limitations for $\ln(x)$

Although this is also true for some other important functions, like sine and cosine, sometimes these series only converge within a certain range around the input whose derivative information you're using. If you work out the Taylor series for $\ln(x)$ around the input $x = 1$, which is built from evaluating the higher-order derivatives of $\ln(x)$ at $x = 1$, this is what it looks like:

$$\ln(x) = (x - 1) - \frac{(x - 1)^2}{2} + \frac{(x - 1)^3}{3} - \frac{(x - 1)^4}{4} + \cdots$$

When you plug in an input between $0$ and $2$, adding more and more terms of this series will indeed get you closer and closer to the natural log of that input.

But outside that range, even by just a bit, the series fails to approach anything. As you add more and more terms, the sum bounces back and forth wildly. The partial sums do not approach the natural log of that value, even though $\ln(x)$ is perfectly well defined for $x > 2$.
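A small Python sketch of that behavior, using the series $\ln(x) = \sum_{n \ge 1} (-1)^{n+1} \frac{(x - 1)^n}{n}$ around $x = 1$. The sample inputs $1.5$ (inside the range) and $2.5$ (outside it) and the name `ln_partial_sum` are our own choices.

```python
import math

def ln_partial_sum(x, n_terms):
    """Partial sum of the Taylor series of ln around 1: sum of (-1)^(n+1) (x-1)^n / n."""
    h = x - 1
    return sum((-1) ** (n + 1) * h**n / n for n in range(1, n_terms + 1))

for x in (1.5, 2.5):
    sums = [ln_partial_sum(x, n) for n in (5, 10, 20, 40)]
    print(x, math.log(x), [f"{s:.4g}" for s in sums])
```

For $x = 1.5$ the partial sums settle on `math.log(1.5)`; for $x = 2.5$ they alternate in sign and grow without bound, because the terms $(1.5)^n / n$ eventually grow without bound.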

In some sense, the derivative information of $\ln(x)$ at $x = 1$ doesn't propagate out that far. In a case like this, where adding more terms of the series doesn't approach anything, you say the series diverges.

The maximum distance between the input you're approximating near and the points where the outputs of these polynomials actually do converge is called the "radius of convergence" for the Taylor series. For the series of $\ln(x)$ around $x = 1$, that radius is $1$.

What is the Taylor Series expansion of the function around the point ?

Summary

There remains more to learn about Taylor series: their many use cases, tactics for placing bounds on the error of these approximations, and tests for understanding when these series do and don't converge. For that matter, there remains more to learn about calculus as a whole, and the countless topics not touched by this series.

The goal with these videos is to give you the fundamental intuitions that make you feel confident and efficient learning more on your own, and potentially even rediscovering more of the topic for yourself. In the case of Taylor series, the fundamental intuition to keep in mind as you explore more is that they translate derivative information at a single point to approximation information around that point.

Thanks

Special thanks to those below for supporting the original video behind this post, and to current patrons for funding ongoing projects. If you find these lessons valuable, consider joining.