Chapter 5Backpropagation calculus

The hard assumption here is that you’ve read the previous part, giving an intuitive walkthrough of the backpropagation algorithm. Here, we get a bit more formal and dive into the relevant calculus. It’s normal for this to be a little confusing, so be sure to pause and ponder throughout.

As a quick reminder, backpropagation is an algorithm for calculating the gradient of the cost function of a network.

The entries of the gradient vector are the partial derivatives of the cost function with respect to all the different weights and biases of the network, so it’s really those derivatives that backpropagation helps us find.

In the last lesson we talked about the intuitive feeling you might have for how backpropagation works, so now our focus will be on connecting that intuition with the appropriate calculus.

(For those uncomfortable with the relevant calculus, I do have a whole series on the topic.)

The main goal here is to show how people in machine learning commonly think about the chain rule in the context of networks, which can feel a bit different than how most introductory calculus courses approach the subject.

Calculating the Gradient with Backpropagation

Let’s start with an extremely simple network, where each layer has just one neuron.

We’ll start with a very simple neural network.

This network is determined by 3 weights (one for each connection) and 3 biases (one for each neuron, except the first), and our goal is to understand how changing each of them will affect the cost function. That way we know which adjustments will cause the most efficient decrease to the cost.

How strongly do these six weights and biases affect the value of the cost function?

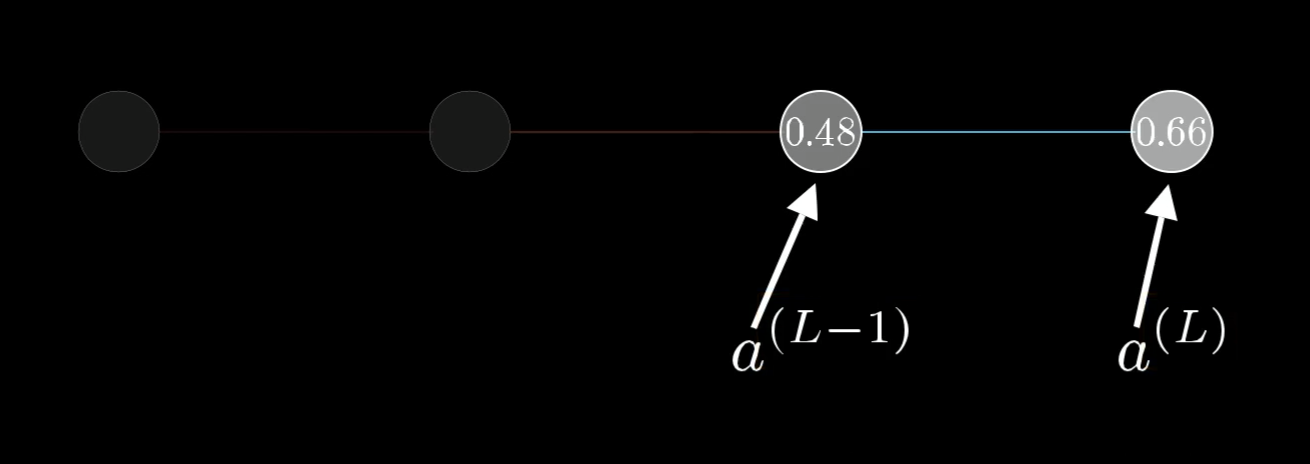

For now, let’s just focus on the connection between the last two neurons. I’ll label the activation of that last neuron with a superscript , indicating which layer it’s in, so the activation of the previous neuron is :

We label the activations of the neurons based on which layer they’re in, where is the last layer of the network.

To be clear, these are not exponents. They are just ways of indexing what layer we’re talking about, since we want to save subscripts for different indices later on.

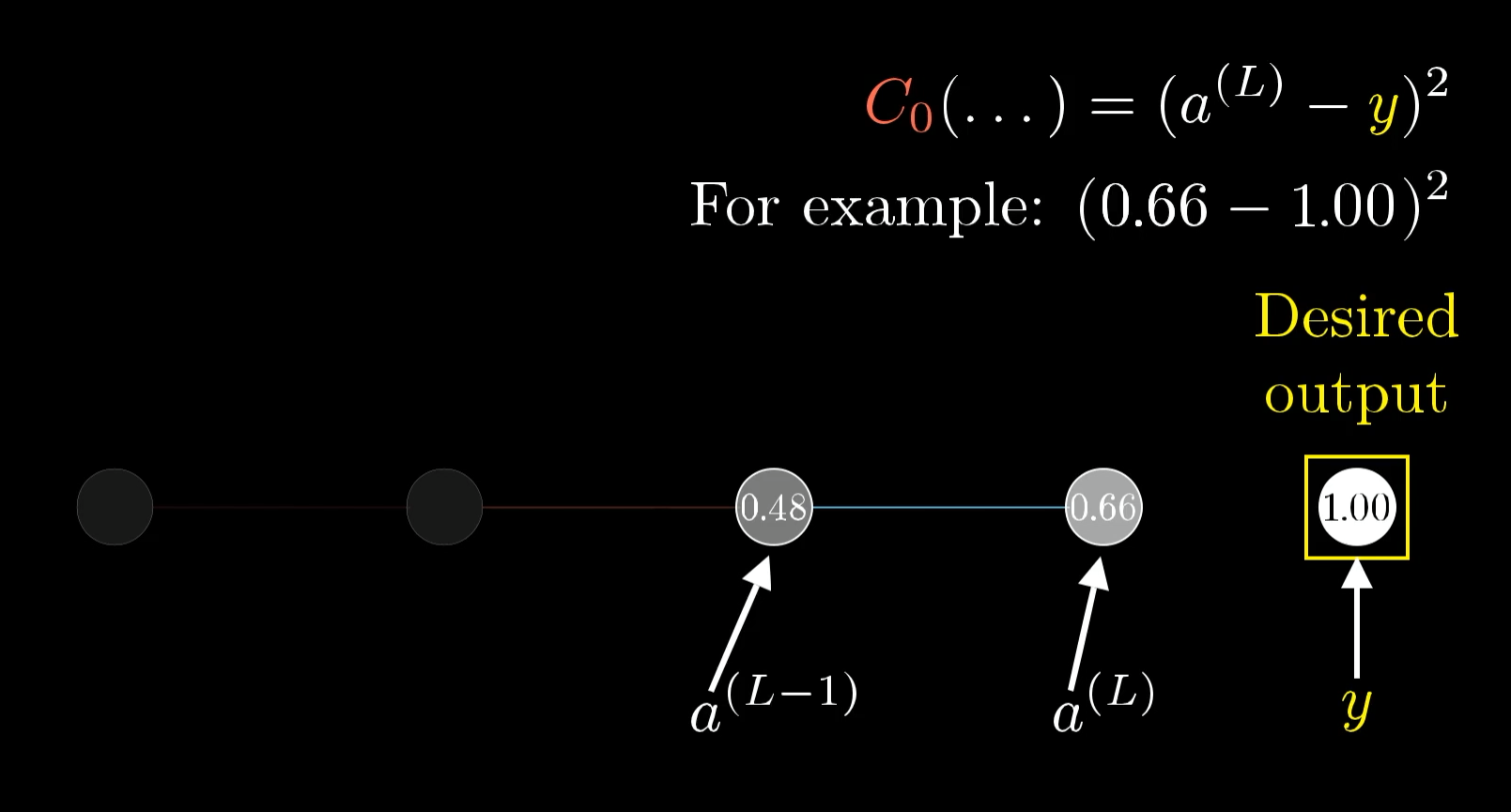

Let’s say that for a certain training example, the desired output is . That means that the cost for this one training example is .

The cost to the network for this one training example is just the difference between the actual output and the desired output squared.

As a reminder, this last activation is determined by a weight, a bias, and the previous neuron’s activation, all pumped through some special nonlinear function like a sigmoid or a ReLU.

It’ll make things easier for us to give a special name to this weighted sum, like , with the same superscript as the activation:

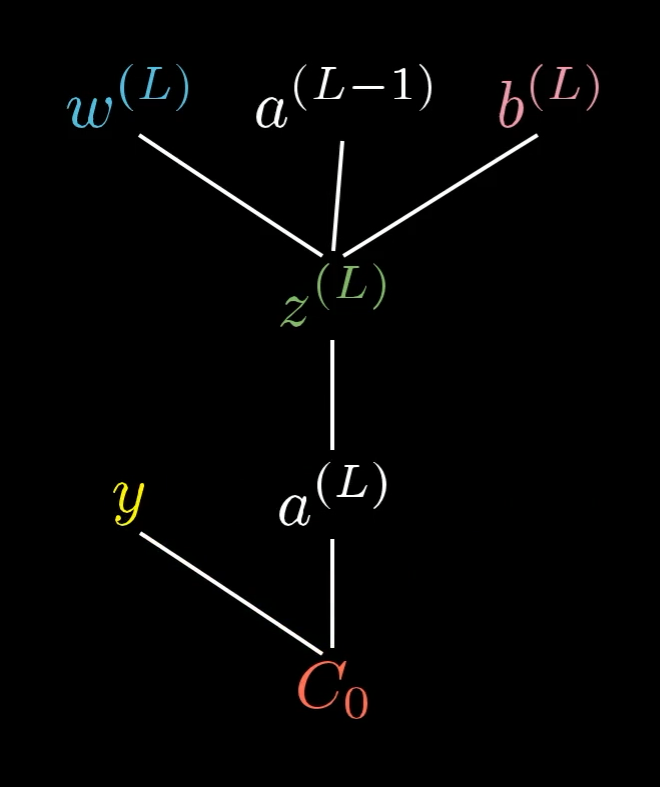

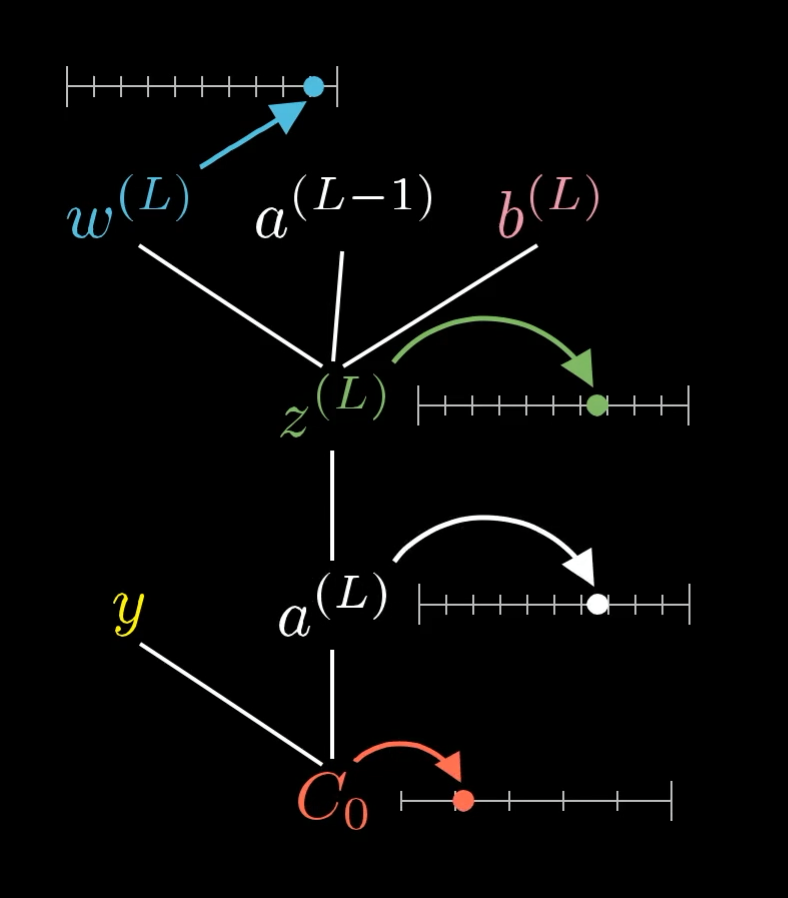

A way you might conceptualize this is that the weight, the previous activation, and the bias together let us compute , which in turn lets us compute , which in turn, along with the constant , lets us compute the cost.

This tree shows which values depend on which other values in our network.

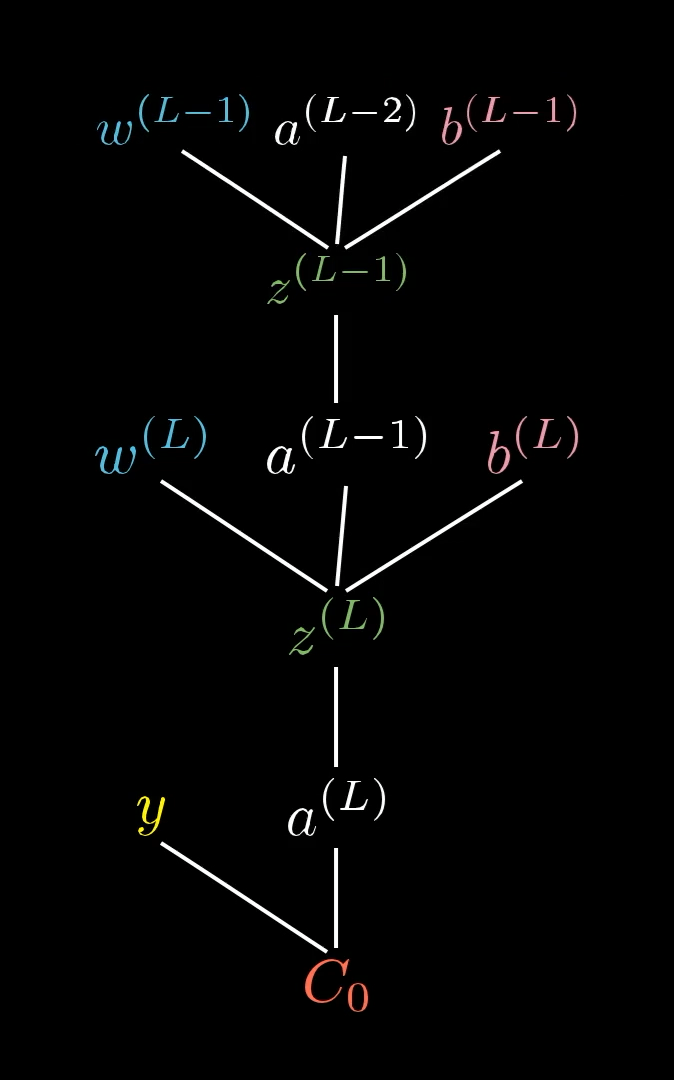

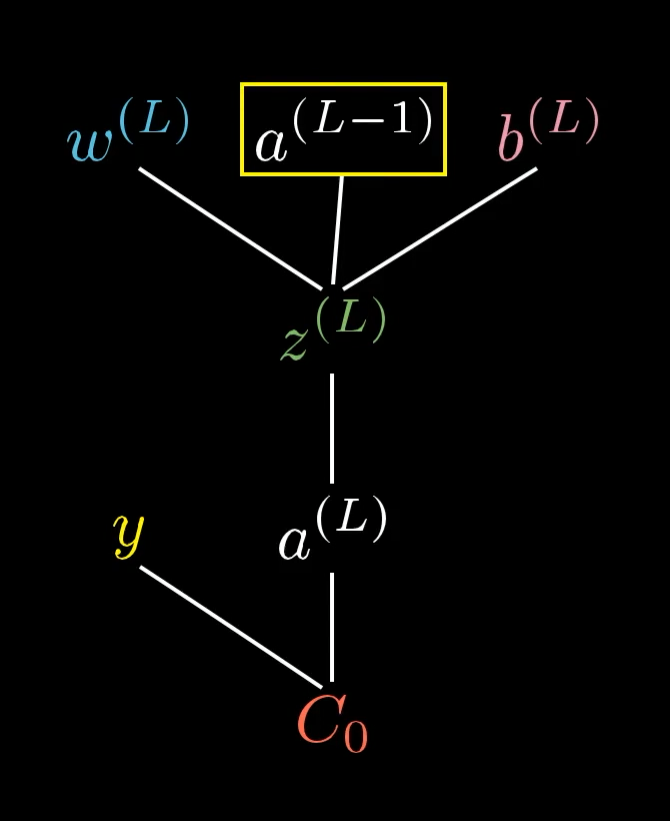

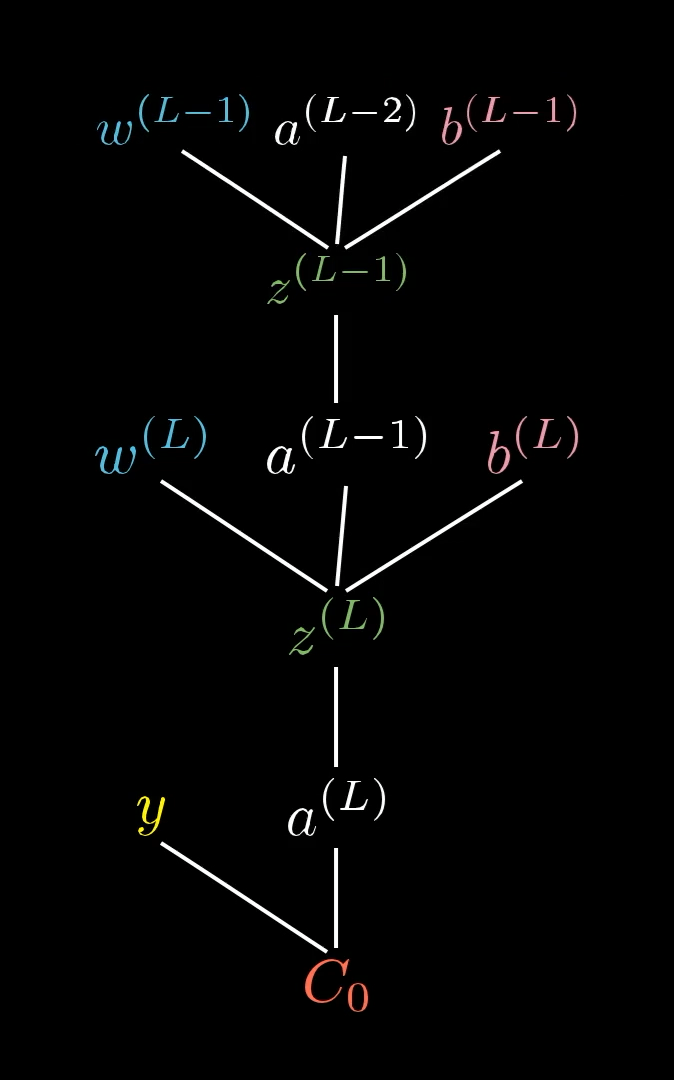

And of course, is influenced by its own weight and bias, which means our tree actually extends up higher...

...but we won’t focus on that right now.

All of these are just numbers, so it can be nice to think of each variable as having its own little number line.

Each of these variables has a value that we can place on its own little number line.

Computing The First Derivative

Our first goal is to understand how sensitive the cost is to small changes in the weight . That is, we want to know the derivative .

When you see this term, think of it as meaning “some tiny nudge to ”, like a change by 0.01. And think of this term as meaning “whatever the resulting nudge to the cost is.” We want their ratio.

Conceptually, this tiny nudge to causes some nudge to , which in turn causes some change to , which directly influences the cost, .

The nudge to has a chain of effects which eventually causes a nudge to .

So we break this up by first taking the ratio of a tiny change to to the tiny change in . That is, the derivative of with respect to . Likewise, consider the ratio of a tiny change to to the tiny change in that caused it, as well as the ratio between the final nudge to and this intermediate nudge to .

This is the chain rule, where multiplying these three ratios gives us the sensitivity of to small changes in .

The Constituent Derivatives

We’ve broken down the derivative we actually want, , into its constituent parts. Now we just need to compute the values of the three individual derivatives that make it up.

To compute each derivative, we’ll use some relevant formula from the way we’ve defined our neural network.

As you can see, each derivative is pretty straightforward once you know which equation to start from.

It’s worth taking a moment to reflect on what these expressions actually mean. Notice the first derivative we computed, . It’s telling us that changing the weight has a stronger effect on (and therefore a stronger effect on the cost) when the previous neuron is more active. This is where that biological idea that “neurons that fire together, wire together” is mirrored in our math.

Also pay attention to the final derivative, . It’s proportional to the difference between the actual output and the desired output. This means that when the actual output is way different than what we want it to be, even small nudges to the activation stand to make a big difference to the cost.

Putting It All Together

We originally broke down into three separate derivatives using the chain rule:

Putting this together with our constituent derivatives, we get:

This formula tells us how a nudge to that one particular weight in the last layer will affect the cost for that one particular training example. This is just one very specific piece of information, and we’ll need to calculate a lot more to get the entire gradient vector.

But the good news is that we’ve already laid the foundations for the rest of the work that needs to be done. Now it’s just a process of generalizing our results until we have an understanding (in the form of the gradient vector) of how all the weights and biases in the network affect the overall cost.

The Cost for All Training Data

Notice the little subscript zero in the derivative we calculated, . That’s there because is just the cost of a single training example.

The full cost function for the network () is the average all the individual costs for each training example:

So if we want the derivative of with respect to the weight (rather than the derivative of with respect to the weight), we need to take the average of all the individual derivatives:

This expression tells us how the overall cost of the network will change when we wiggle the last weight.

Recall that the entries of the gradient vector are the partial derivatives of the cost function with respect to every weight and bias in the network. So this derivative, , is one of its entries!

The Derivative for All Weights And Biases

But to compute the full gradient, we will also need all the other derivatives with respect to all the other weights and biases in the entire network.

Let’s work on computing the bias in the last layer next.

The Bias

The good news is that the sensitivity of the cost function to a change in the bias is almost identical to the equation for a change to the weight.

As a reminder, here’s the equation we found earlier for the derivative with respect to the weight in the last layer:

And here’s the new equation for the derivative with respect to the bias in the last layer (instead of the weight):

All we’ve done is replaced the with a .

Luckily, this new derivative is simply :

So the derivative for the bias turns out to be even simpler than the derivative for the weight.

That’s two gradient entries taken care of.

Previous Layers’ Weights and Biases

We’ve now determined how changes to the last weight and last bias in our super-simple neural network will affect the overall cost, which means we already have two of the entries of our gradient vector.

All the other weights and biases lie in earlier layers of the network, meaning their influence on the cost is less direct. The way we handle them is to first see how sensitive the cost is to the value of that neuron in the second-to-last layer, , and then to see how sensitive that value is to all the preceding weights and biases.

We still need to figure out what to do with the activation of the neuron in the previous layer.

Unsurprisingly, the derivative of the cost with respect to that activation looks very similar to what we’ve seen already:

To solve it, we just need to figure out the value of , which we can do by reminding ourselves of the definition of .

So when the activation in the second-to-last layer is changed, the effect on will be proportional to the weight.

But we don’t really care about what happens when we change the activation directly, because we don’t have control over that. All we can change, when trying to improve the network through gradient descent, is the values of the weights and biases.

The trick here is to remember that the activation in the previous layer is actually determined by its own set of weights and biases. In reality, our tree stretches back farther than we’ve shown so far:

The activation in the second-to-last layer is determined by its own weights and biases. The plot thickens.

This is where the propagation backwards comes in. Even though we won’t be able to directly change that activation, it’s helpful to keep track of, because we can just keep iterating this chain rule backwards to see how sensitive the cost function is to previous weights and biases.

For example, there is a long chain of dependencies between the weight and the cost . The way this will show up mathematically is that the partial derivative of the cost with respect to that weight will look like a long chain of partial derivatives for each intermediate step.

How would you break down the derivative into bite-size steps using the chain rule? Take a moment to trace it out. (It might be helpful to refer back to the extended tree image above.)

These are the derivatives that trace a path through the tree connecting to . (It's okay if you wrote yours in a different order; we're just multiplying them all together.)

By tracking the dependencies through our tree, and multiplying together a long series of partial derivatives, we can now calculate the derivative of the cost function with respect to any weight or bias in the entire network. We’re simply applying the same chain rule idea we’ve been using all along!

And since we can get any derivative we want, we can compute the entire gradient vector. Job done! At least for this network.

More Complicated Networks

So that’s how you get the relevant derivatives for this super simple network.

You might think that this is an overly simple example, because each layer has just one neuron, and that it’s going to get dramatically more complicated for a real network. But honestly, not that much changes when we give the layers multiple neurons; it’s just a few more indices to keep track of really.

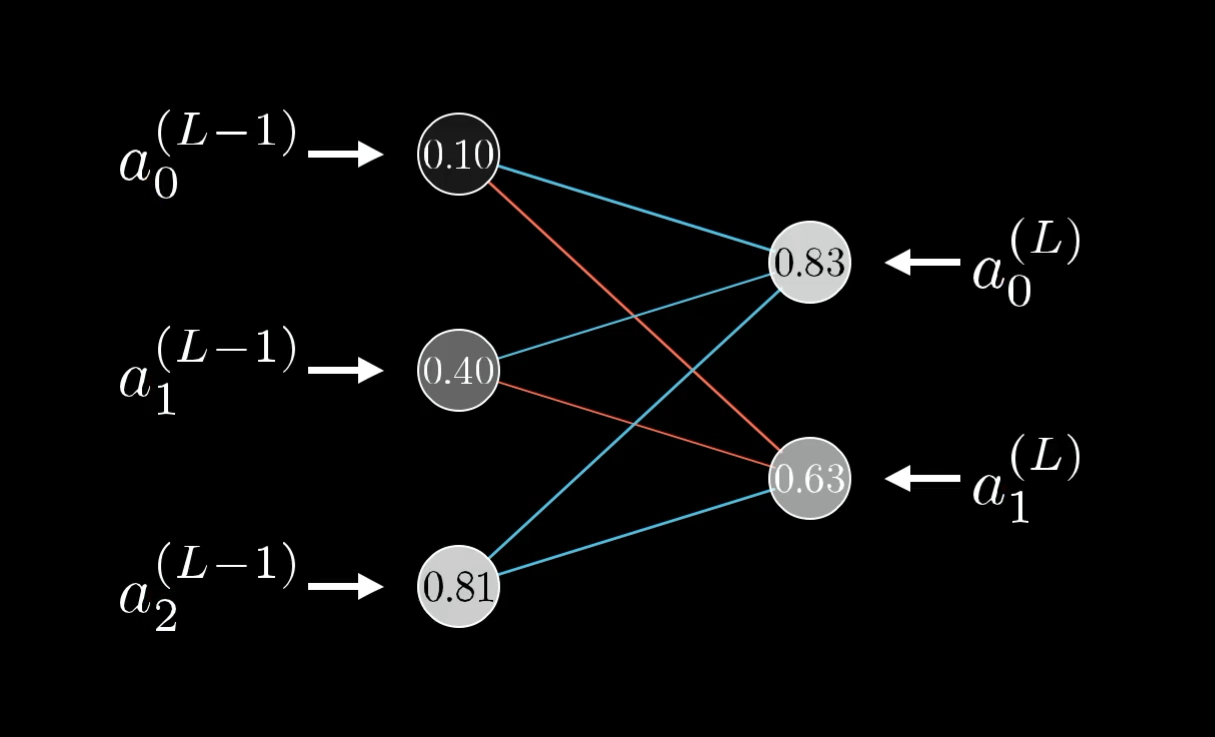

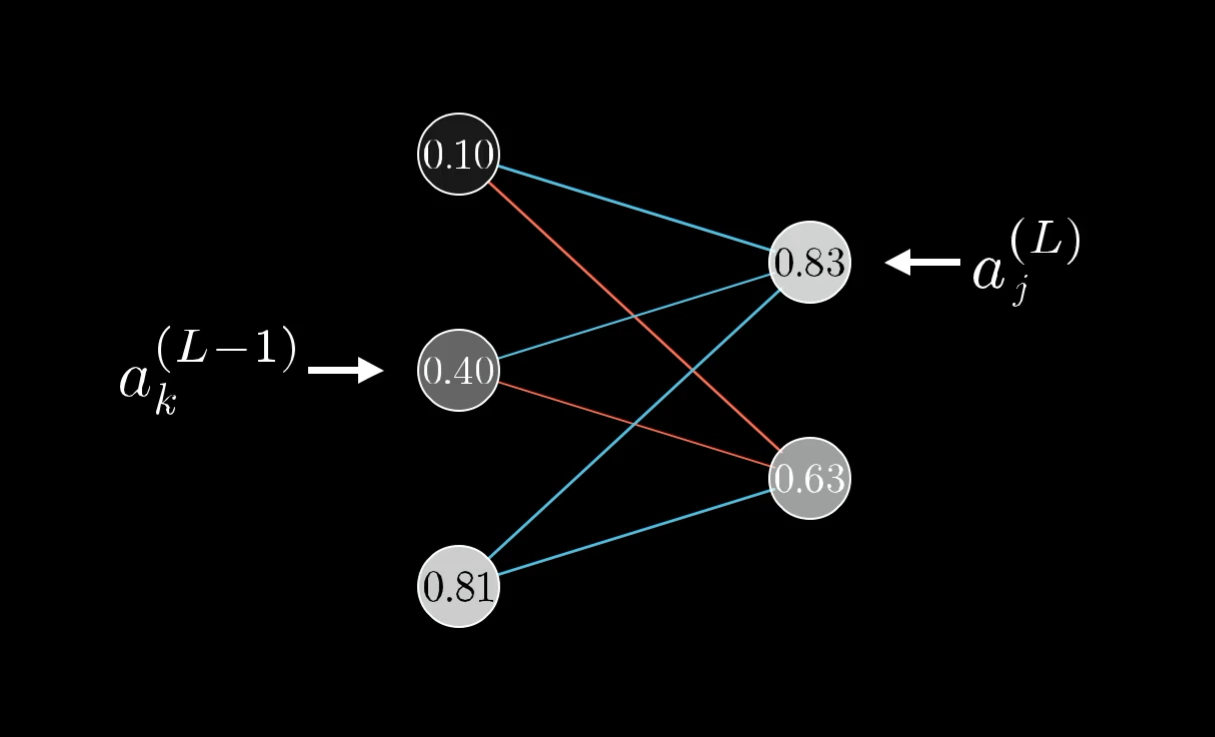

Rather than the activation in a given layer simply being , it will also have a subscript indicating which neuron of the layer it is.

The superscript on each neuron indicates which layer it’s on, and the subscript indicates which neuron it is.

Let’s use the letter to index the layer , and to index the layer .

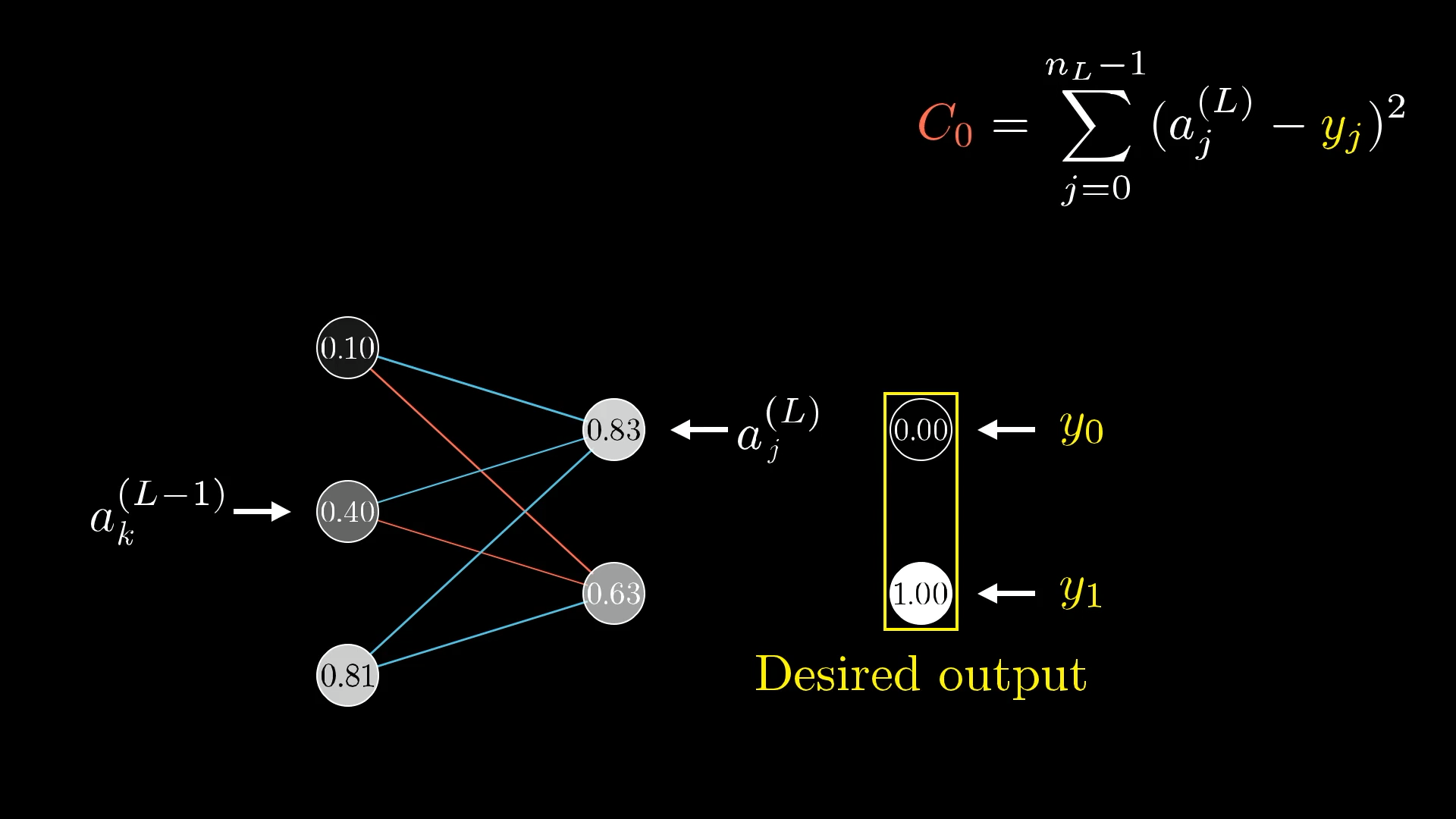

For the cost, again look at what the desired output is, then add up the squares of the differences between these last layer activations and this desired output. That is, sum over .

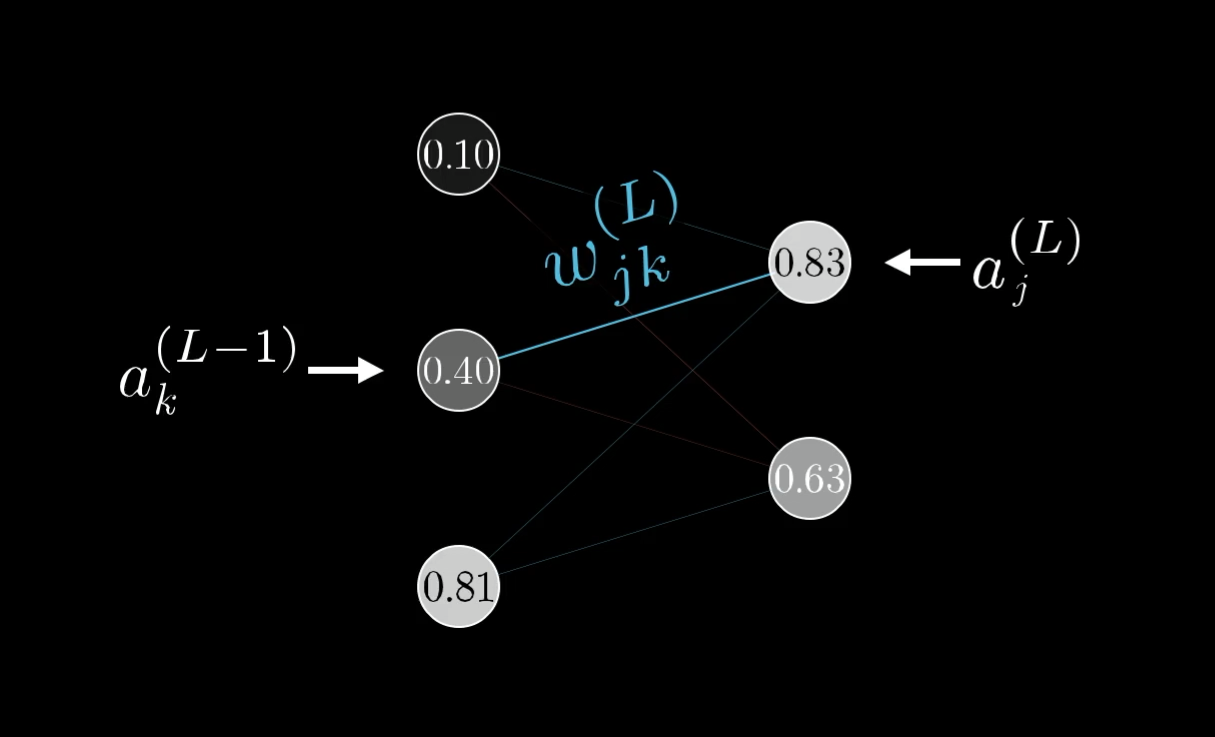

Each weight now needs a few more indices to keep track of where it is, so let’s call the weight of the edge connecting this th neuron to this th neuron :

Those indices, , might feel backwards at first, but it lines up with how you’d index the weight matrix I talked about in part 1 of this series.

It’s still nice to give a name to the relevant weighted sum, like .

We still wrap this weighted sum with a special function like a sigmoid or a ReLU to get the final activation.

The new equations we have are all essentially the same as what we had in the one-neuron-per-layer case. Indeed, the chain-rule derivative expression describing how sensitive the cost is to a particular weight is essentially identical to what we had before.

The only difference is that we’re keeping track of more indices, and , which tell us which weight specifically we’re talking about. After all, there are now many weights connecting the second-to-last layer to the last.

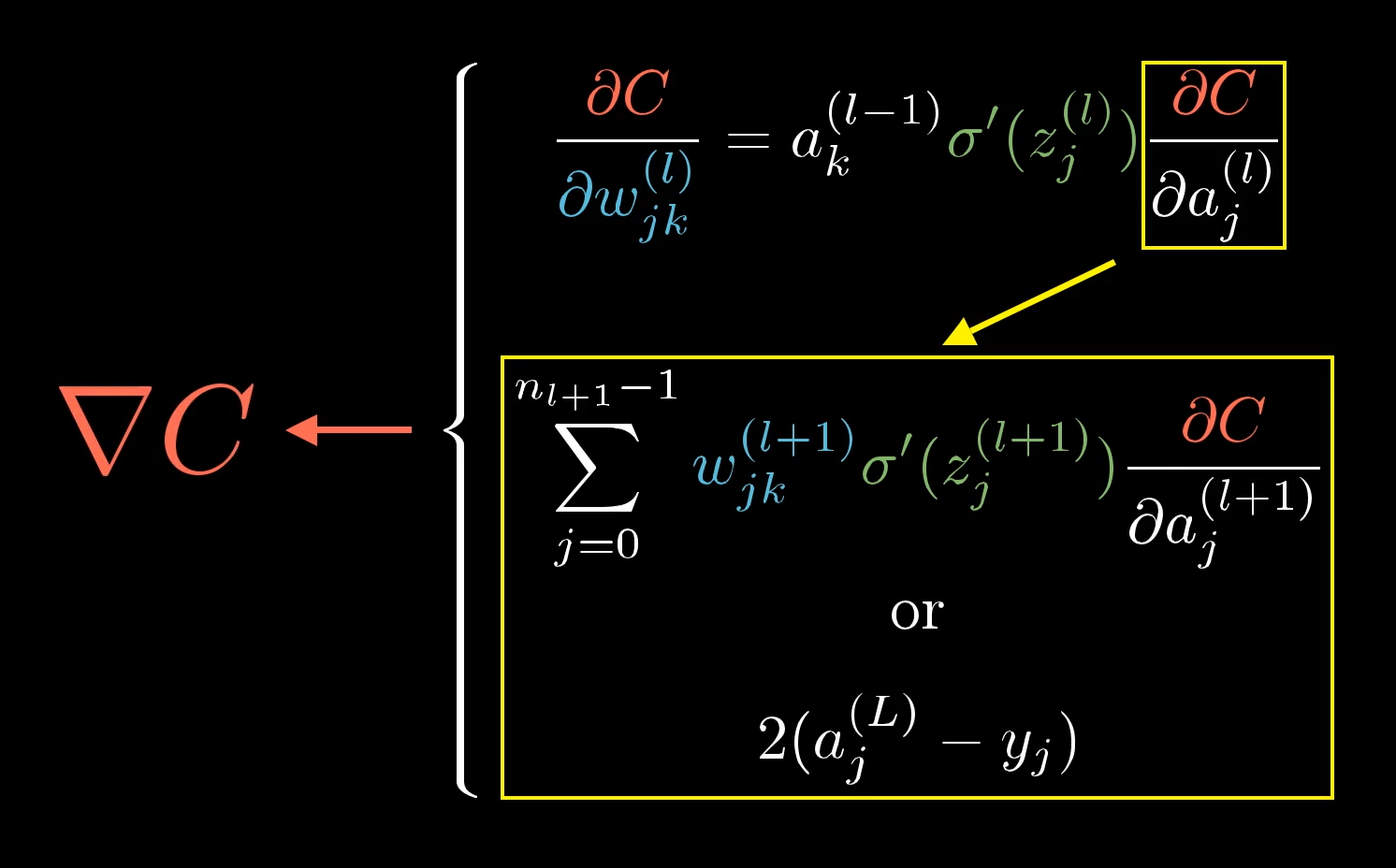

What does change, though, is the derivative of the cost with respect to one of the activations in the layer , .

This neuron influences the cost function through multiple paths.

The activation in one layer affects all the activations in the next layer.

So to understand the sensitivity of the cost function to this neuron, you have to add up the influences along each of those different paths. That is, on the one hand, it influences , which plays a role in the cost function, but on the other hand it influences , which also plays a role in the cost function.

Because this previous-layer neuron influences the cost along multiple different paths, the derivative of the cost with respect to this neuron, the sensitivity of to changes in , involves adding up multiple different chain rule expressions corresponding to each path of influence.

And that’s… pretty much it. Once you know how sensitive the cost function is to activations in this second-to-last layer, you can just repeat the process for all the weights and biases feeding into that layer.

Conclusion

So pat yourself on the back! If this all makes sense, you have now looked into the heart of backpropagation, the workhorse behind how neural networks learn.

A large portion of the math for calculating the gradient using backpropagation is captured in this image.

These chain rule expressions are the derivatives that make up the gradient vector, which lets us minimize the cost of the network by repeatedly stepping downhill. If you sit back and think about it, that’s a lot of layers of complexity to wrap your mind around, and it takes time for your mind to digest it all.

That's a lot to think about!

These formulas are usually encapsulated more neatly into a few nice vector expressions, so all the messy indices aren’t out in the open.

Given that you’d typically have the actual implementation handled by a library for you, the most important and generalizable understanding here is how you can reason about the sensitivity of one variable to another by breaking down the chain of dependencies.

Stepping back, if you take away just one idea from this series, I want you to reflect on how even relatively simple pieces of math, like matrix multiplication and derivatives, can enable you to build genuinely incredible technology when put into the right context.

Think about how matrix multiplication elegantly captures the propagation of information from one layer of neurons to the next. Think about how we can take the somewhat fuzzy idea of intelligence, or at least the narrow sliver of intelligence required to classify images correctly, and turn it into a piece of calculus by finding the minimum of a carefully defined cost function. Think about how derivatives and gradients give us a concrete way to find such a minimum (well, a local minimum anyway). Or think about the chain rule, which in most calculus classes comes across as “just one of those tools” that you need for more homework problems, and how in this context it let us cleanly decompose an insanely complicated network of influences to understand how sensitive that cost function is to each and every weight and bias.

Additional Resources

This marks the end of our series together, but there is always more to learn! If you want to dive even deeper into the calculus of backpropagation, I recommend these resources:

- http://neuralnetworksanddeeplearning.com/chap2.html

- https://github.com/mnielsen/neural-networks-and-deep-learning

- http://colah.github.io/posts/2015-08-Backprop/

And if you're interested in diving deeper into this topic as a whole, I highly recommend the book by Michael Nielsen on deep learning and neural networks. In it, you can find the code and data to download to play with for this exact example, and he walks you through step-by-step what that code is doing.

What’s awesome is that the book is free and publically available, so if you get something out of it, consider joining me in making a donation to Nielsen’s efforts.

Also check out Chris Olah's blog. His post on Neural networks and topology is particularly beautiful, but honestly all of the stuff there is great. And if you like that, you'll love the publications at Distill.

For more videos, Welch Labs also has some great series on machine learning:

"But I've already voraciously consumed Nielsen's, Olah's and Welch's works," I hear you say. Well well, look at you then. That being the case, I might recommend that you continue on with the book "Deep Learning" by Goodfellow, Bengio, and Courville.

Thanks

Special thanks to those below for supporting the original video behind this post, and to current patrons for funding ongoing projects. If you find these lessons valuable, consider joining.