Chapter 4: Matrix multiplication as composition

"It is my experience that proofs involving matrices can be shortened by 50% if one throws the matrices out."

— Emil Artin

Recap

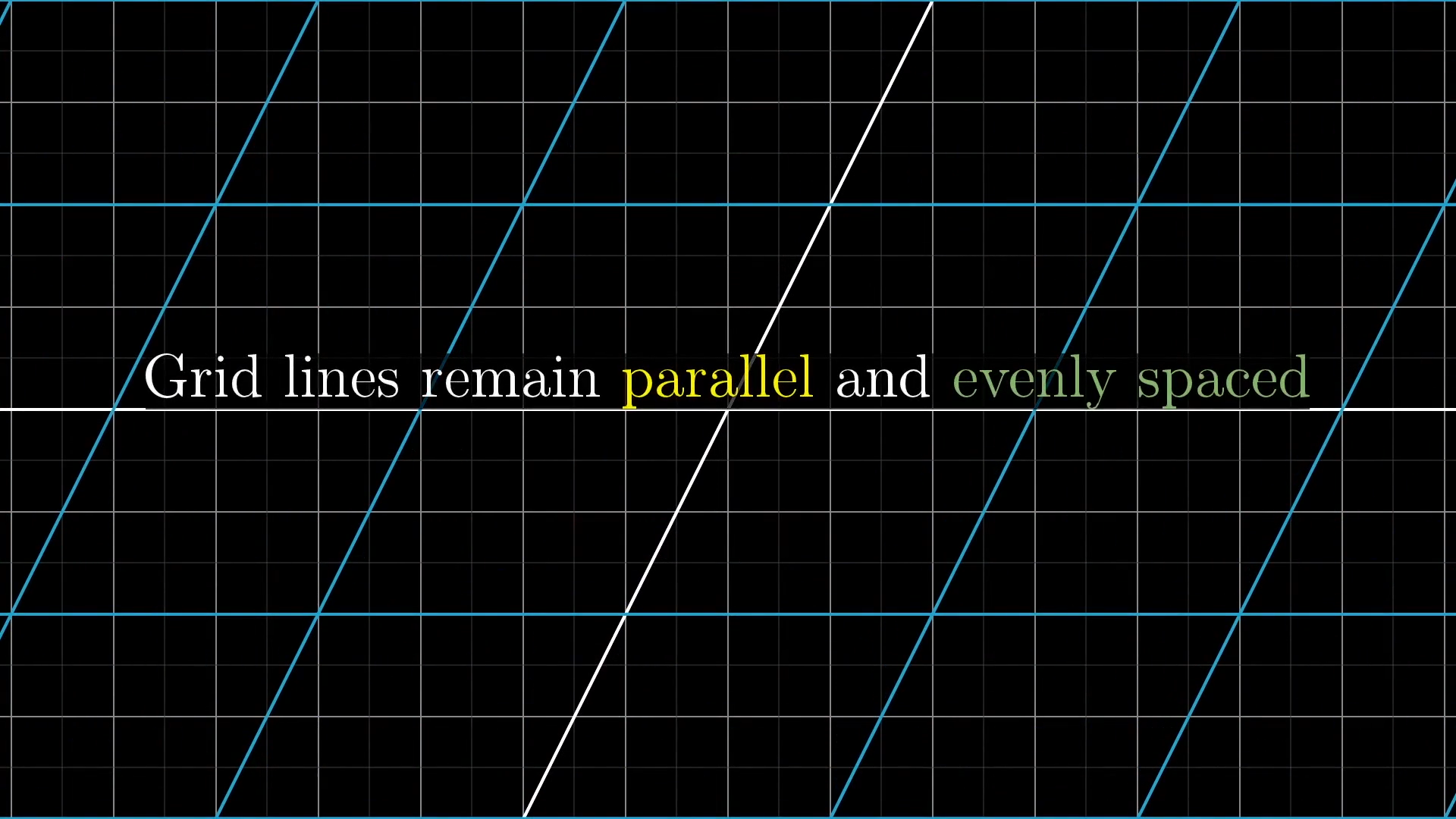

In the previous lesson we showed that linear transformations are just functions with vectors as inputs and vectors as outputs. However, it is often more convenient to think of linear transformations as moving space in a way that keeps gridlines parallel and evenly spaced, with the origin fixed in place.

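The entire transformation is determined by where the basis vectors $\hat{\imath}$ and $\hat{\jmath}$ land, and those landing spots form the columns of a matrix. To find where any other vector lands after the transformation, we use an operation called matrix-vector multiplication. Here is the computation for a 2D matrix and vector:

$$\begin{bmatrix} a & b \\ c & d \end{bmatrix}\begin{bmatrix} x \\ y \end{bmatrix} = x\begin{bmatrix} a \\ c \end{bmatrix} + y\begin{bmatrix} b \\ d \end{bmatrix} = \begin{bmatrix} ax + by \\ cx + dy \end{bmatrix}$$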
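A minimal Python sketch of matrix-vector multiplication, assuming a 2×2 matrix stored as a list of rows (a representation chosen here for illustration; the function name `matvec` is hypothetical). The result is $x$ copies of the first column plus $y$ copies of the second.

```python
def matvec(m, v):
    """Apply the 2x2 matrix m (a list of rows) to the 2D vector v."""
    (a, b), (c, d) = m
    x, y = v
    # x copies of the first column [a, c] plus y copies of the second [b, d]
    return [x * a + y * b, x * c + y * d]


m = [[1, 3], [-2, 0]]      # hypothetical example matrix
print(matvec(m, [-1, 2]))  # [5, 2]
```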

Composition of Transformations

Oftentimes, you find yourself wanting to describe the effects of applying one linear transformation, then applying another. For example, maybe you want to describe what happens when you rotate the plane counterclockwise, then apply a shear. The overall effect here, from start to finish, is another linear transformation, distinct from the rotation and the shear. This new linear transformation is commonly called the “composition” of the two separate transformations we applied.

Like any linear transformation, it can be described with a matrix all of its own, found by following $\hat{\imath}$ and $\hat{\jmath}$.

In this example, follow $\hat{\imath}$ through both transformations and make its ultimate landing spot the first column of a matrix. Likewise, wherever $\hat{\jmath}$ ultimately ends up becomes the second column. This new matrix captures the overall effect of applying a rotation then a shear, but as one single action rather than two successive ones.
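To make this concrete, here is a small Python sketch that builds the composition matrix by following $\hat{\imath}$ and $\hat{\jmath}$. The text does not pin down the exact transformations, so this assumes a 90° counterclockwise rotation and the shear that fixes $\hat{\imath}$ and sends $\hat{\jmath}$ to $(1, 1)$. The helper names are hypothetical.

```python
def matvec(m, v):
    """Apply the 2x2 matrix m (a list of rows) to the 2D vector v."""
    (a, b), (c, d) = m
    x, y = v
    return [a * x + b * y, c * x + d * y]


def from_columns(col1, col2):
    """Build a row-major 2x2 matrix whose columns are col1 and col2."""
    return [[col1[0], col2[0]], [col1[1], col2[1]]]


# Assumed example transformations (hypothetical; the text does not give them)
rotation = [[0, -1], [1, 0]]  # 90 degrees counterclockwise
shear = [[1, 1], [0, 1]]      # fixes i-hat, pushes j-hat to (1, 1)

i_hat, j_hat = [1, 0], [0, 1]
# Follow each basis vector through the rotation first, then the shear.
i_final = matvec(shear, matvec(rotation, i_hat))
j_final = matvec(shear, matvec(rotation, j_hat))

composition = from_columns(i_final, j_final)
print(composition)  # [[1, -1], [1, 0]]
```

Under these assumptions $\hat{\imath}$ ends at $(1, 1)$ and $\hat{\jmath}$ at $(-1, 0)$, and those two vectors are the columns of the composition matrix.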

Composition is Multiplication

Here’s one way to think about that new matrix. If you were to take some vector and pump it through the rotation then the shear, the long way to compute where it lands is to first multiply it on the left by the rotation matrix, then multiply the result on the left by the shear matrix. This is, numerically speaking, what it means to apply a rotation then a shear to a given vector. But whatever you get should be the same as just multiplying the new composition matrix by that vector, no matter which vector you chose, since the new matrix is supposed to capture the same overall effect as the rotation-then-shear action:

$$\text{Shear}\,\big(\text{Rotation}\,\vec{v}\big) = \text{Composition}\,\vec{v}$$

Based on how things are written down here, I think it’s reasonable to call that new matrix the product of the two original matrices, don’t you?
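A quick numerical sanity check of that claim, sketched in Python. It assumes a 90° counterclockwise rotation and the shear that fixes $\hat{\imath}$ and sends $\hat{\jmath}$ to $(1, 1)$; tracking the basis vectors for that pair gives a composition matrix with columns $(1, 1)$ and $(-1, 0)$. These matrices are illustrative, and checking a grid of vectors is evidence, not a proof.

```python
import itertools


def matvec(m, v):
    """Apply the 2x2 matrix m (a list of rows) to the 2D vector v."""
    (a, b), (c, d) = m
    x, y = v
    return [a * x + b * y, c * x + d * y]


# Assumed example matrices (hypothetical, for illustration only)
rotation = [[0, -1], [1, 0]]     # 90 degrees counterclockwise
shear = [[1, 1], [0, 1]]         # fixes i-hat, pushes j-hat to (1, 1)
composition = [[1, -1], [1, 0]]  # columns: final spots of i-hat and j-hat

# Compare the long way (rotation, then shear) with the single matrix
# on every integer vector in a small grid.
for x, y in itertools.product(range(-3, 4), repeat=2):
    v = [x, y]
    long_way = matvec(shear, matvec(rotation, v))
    assert long_way == matvec(composition, v), v

print("rotation-then-shear agrees with the composition matrix")
```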

We can think about how to compute that product more generally in just a moment, but it’s easy to get lost in the forest of numbers. Always remember that multiplying two matrices like this has the geometric meaning of applying one transformation after another.

One thing that’s kind of weird is that this has us reading right to left: you first apply the transformation represented by the matrix on the right, then you apply the transformation represented by the matrix on the left. This stems from function notation. We write a function to the left of its input, so a composition such as $f(g(x))$ has to be read right to left. Good news for Hebrew readers, bad news for the rest of us.

Computing the New Matrix

Let’s look at another example, with two matrices $M_1$ and $M_2$ that perform different transformations.

The total effect of applying $M_1$, then $M_2$, gives a new transformation, the product $M_2 M_1$, so let’s find its matrix.

This time, though, let’s see if we can do it without any images, using only the numerical entries in each matrix.

First we need to figure out where $\hat{\imath}$ goes. After applying $M_1$, the new coordinates of $\hat{\imath}$ are given by the first column of $M_1$. To see what happens after applying $M_2$, multiply the matrix for $M_2$ by the first column of $M_1$. Working it out the way I described in the last chapter gives the final landing spot of $\hat{\imath}$, which is the first column of the product.

Likewise, multiplying $M_2$ by the second column of $M_1$ tells us where $\hat{\jmath}$ goes after both transformations, which gives the second column of the product.

Putting these two columns side by side gives the matrix of the composition, $M_2 M_1$.
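The same column-by-column procedure, sketched in Python. The entries of `m1` and `m2` below are hypothetical example values chosen for illustration, and the helper names are also hypothetical.

```python
def matvec(m, v):
    """Apply the 2x2 matrix m (a list of rows) to the 2D vector v."""
    (a, b), (c, d) = m
    x, y = v
    return [a * x + b * y, c * x + d * y]


def column(m, k):
    """Return column k of a row-major 2x2 matrix."""
    return [m[0][k], m[1][k]]


def from_columns(col1, col2):
    """Build a row-major 2x2 matrix whose columns are col1 and col2."""
    return [[col1[0], col2[0]], [col1[1], col2[1]]]


# Hypothetical example entries for M1 and M2
m1 = [[1, -2], [1, 0]]
m2 = [[0, 2], [1, 0]]

# After M1, i-hat sits at column 0 of M1 and j-hat at column 1;
# applying M2 to each gives the columns of the product M2 M1.
i_final = matvec(m2, column(m1, 0))   # [2, 1]
j_final = matvec(m2, column(m1, 1))   # [0, -2]
print(from_columns(i_final, j_final))  # [[2, 0], [1, -2]]
```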

General Form

Let's go through that same process again, but this time the entries in each matrix will be variables, just to show that the same line of reasoning works for any matrices. This is more symbol-heavy and will require some more room, but it should be satisfying for anyone who has previously been taught matrix multiplication the traditional way.

To follow where $\hat{\imath}$ goes, start by looking at the first column of the matrix on the right, since this is where $\hat{\imath}$ initially lands. Multiplying that column by the matrix on the left is how you can tell where that intermediate version of $\hat{\imath}$ ends up after applying the second transformation. So the first column of the product matrix will always equal the left matrix times the first column of the right matrix.

Similarly, $\hat{\jmath}$ will always initially land on the second column of the right matrix, so multiplying the left matrix by this second column will give the final location of $\hat{\jmath}$, and hence the second column of the product matrix.
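Nothing in this argument depends on the matrices being 2×2. Here is a minimal Python sketch that builds any product column by column, assuming matrices stored as lists of rows with compatible shapes (the helper names are hypothetical).

```python
def matvec(m, v):
    """Return m times v, built as v[0]*(column 0) + v[1]*(column 1) + ..."""
    result = [0] * len(m)
    for j, coeff in enumerate(v):
        for i in range(len(m)):
            result[i] += coeff * m[i][j]
    return result


def matmul_by_columns(left, right):
    """Column k of left @ right is left applied to column k of right."""
    n_cols = len(right[0])
    cols = [matvec(left, [row[k] for row in right]) for k in range(n_cols)]
    # Transpose the list of columns back into a list of rows.
    return [list(r) for r in zip(*cols)]


print(matmul_by_columns([[1, 2], [3, 4]], [[5, 6], [7, 8]]))  # [[19, 22], [43, 50]]
```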

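It’s common to be taught this formula as something to memorize, along with a certain algorithmic process to remember it: each entry of the product is a row of the left matrix dotted with a column of the right matrix. Multiplying two general 2×2 matrices yields

$$\begin{bmatrix} a & b \\ c & d \end{bmatrix}\begin{bmatrix} e & f \\ g & h \end{bmatrix} = \begin{bmatrix} ae + bg & af + bh \\ ce + dg & cf + dh \end{bmatrix}$$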
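A Python sketch comparing the memorized entry formula with the column-by-column reasoning on random integer matrices. The function names are hypothetical, and agreement on random samples is only a spot check; the column argument in the text is what shows the two always agree.

```python
import random


def formula_2x2(left, right):
    """The memorized formula: entry (i, k) is row i of left dotted with column k of right."""
    (a, b), (c, d) = left
    (e, f), (g, h) = right
    return [[a * e + b * g, a * f + b * h],
            [c * e + d * g, c * f + d * h]]


def by_columns(left, right):
    """Column k of the product is left applied to column k of right."""
    (a, b), (c, d) = left
    cols = []
    for k in range(2):
        x, y = right[0][k], right[1][k]
        # x copies of left's first column plus y copies of its second column
        cols.append([a * x + b * y, c * x + d * y])
    return [[cols[0][0], cols[1][0]], [cols[0][1], cols[1][1]]]


random.seed(0)
for _ in range(1000):
    left = [[random.randint(-9, 9) for _ in range(2)] for _ in range(2)]
    right = [[random.randint(-9, 9) for _ in range(2)] for _ in range(2)]
    assert formula_2x2(left, right) == by_columns(left, right)
```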

I really do believe that before memorizing that process, you should get in the habit of thinking about what matrix multiplication really represents: applying one transformation after another. Trust me, this will give you the conceptual framework that makes the properties of matrix multiplication easier to understand.

Noncommutativity

Here is an important question: Does it matter what order we put the two matrices in?

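Well, let’s think of a simple example like the one from earlier. Take a shear, which fixes $\hat{\imath}$ and smooshes $\hat{\jmath}$ over to the right, and a rotation. Compare applying the shear and then the rotation with applying the rotation and then the shear.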

The overall effect is very different, so it would appear that order totally does matter! Thinking in terms of transformations, that’s the kind of thing you can check in your head just by visualizing, with no matrix multiplication necessary!

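Numerically, this means that when you multiply these two matrices, the order of the factors changes the result:

$$\text{Shear}\cdot\text{Rotation} \neq \text{Rotation}\cdot\text{Shear}$$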
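A small Python check of this, assuming (for illustration only) a 90° counterclockwise rotation and the shear that fixes $\hat{\imath}$ and sends $\hat{\jmath}$ to $(1, 1)$. The `matmul` helper is hypothetical.

```python
def matmul(left, right):
    """2x2 product: entry (i, k) is row i of left dotted with column k of right."""
    return [[sum(left[i][j] * right[j][k] for j in range(2)) for k in range(2)]
            for i in range(2)]


# Assumed example matrices (hypothetical, for illustration only)
rotation = [[0, -1], [1, 0]]  # 90 degrees counterclockwise
shear = [[1, 1], [0, 1]]      # fixes i-hat, pushes j-hat to (1, 1)

# Right-to-left reading: matmul(shear, rotation) means rotate first, then shear.
rotate_then_shear = matmul(shear, rotation)
shear_then_rotate = matmul(rotation, shear)

print(rotate_then_shear)  # [[1, -1], [1, 0]]
print(shear_then_rotate)  # [[0, -1], [1, 1]]
```

Reading the columns geometrically: shearing first leaves $\hat{\jmath}$ at $(1, 1)$, which the rotation then carries to $(-1, 1)$, whereas rotating first sends $\hat{\jmath}$ to $(-1, 0)$, which the shear leaves in place.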

Associativity

I remember when I first took linear algebra, there was a homework problem that asked us to prove that matrix multiplication is associative. That means that if you have three matrices $A$, $B$, and $C$ and multiply them all together, it shouldn’t matter whether you first compute $AB$ and then multiply the result by $C$, or first compute $BC$ and then multiply the result by $A$ on the left. In other words, does it matter where you put the parentheses? Associativity says it doesn’t: $(AB)C = A(BC)$.

Now, if you try to work through this numerically, like I did back then, it’s horrible! And unenlightening, for that matter. But when you think of matrix multiplication as applying one transformation after another, this property is trivial. Can you see why?

What it’s saying is that applying ($C$ then $B$), then $A$, which is $A(BC)$, is the same as applying $C$, then ($B$ then $A$), which is $(AB)C$. I mean, there’s nothing to prove! You’re just applying three things one after the other, all in the same order!

This might feel like cheating, but it’s not. It’s an honest-to-goodness proof that matrix multiplication is associative. And even better than that, it’s a good explanation for why that property should be true.
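If you want numerical reassurance on top of that argument, here is a Python spot check on random 3×3 integer matrices. It is a sanity check only, not a substitute for the proof; the helper names are hypothetical.

```python
import random


def matmul(left, right):
    """Entry (i, k) is row i of left dotted with column k of right."""
    n, m, p = len(left), len(right), len(right[0])
    return [[sum(left[i][j] * right[j][k] for j in range(m)) for k in range(p)]
            for i in range(n)]


def rand_matrix(n, rng):
    """Random n x n matrix with small integer entries (exact arithmetic)."""
    return [[rng.randint(-5, 5) for _ in range(n)] for _ in range(n)]


rng = random.Random(1)
for _ in range(200):
    a, b, c = (rand_matrix(3, rng) for _ in range(3))
    # (AB)C: apply C, then (B then A).  A(BC): apply (C then B), then A.
    assert matmul(matmul(a, b), c) == matmul(a, matmul(b, c))

print("(AB)C == A(BC) on every sample")
```

Integer entries keep the arithmetic exact, so the equality test is not disturbed by floating-point rounding.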